Are smart-city practices putting pressure on public values?

The five largest cities in the Netherlands – the ‘G5’ cities – use data and smart technology to improve the efficiency and effectiveness of governance, innovation and participation. But what does that mean for local residents? What happens to people’s autonomy and privacy if they’re constantly being tracked and nudged? Can city-dwellers exercise any control over the technology themselves?

This article is one in a series of three.

Digitisation can have an impact on societal and ethical issues. We wrote about this earlier in our report Urgent upgrade: Protecting public values in the digitised society. What about smart cities? How do they impact these values?

We interviewed ‘smart city professionals’ – project coordinators, trailblazers and strategic consultants involved in smart city and data-driven projects – in the five largest Dutch cities, i.e. Eindhoven, The Hague, Rotterdam, Amsterdam and Utrecht. We also interviewed civil society organisations in the same cities who are critical of the data-driven city. In our interviews, we further addressed specific ways to protect these values. We will discuss these strategies in next week’s publication.

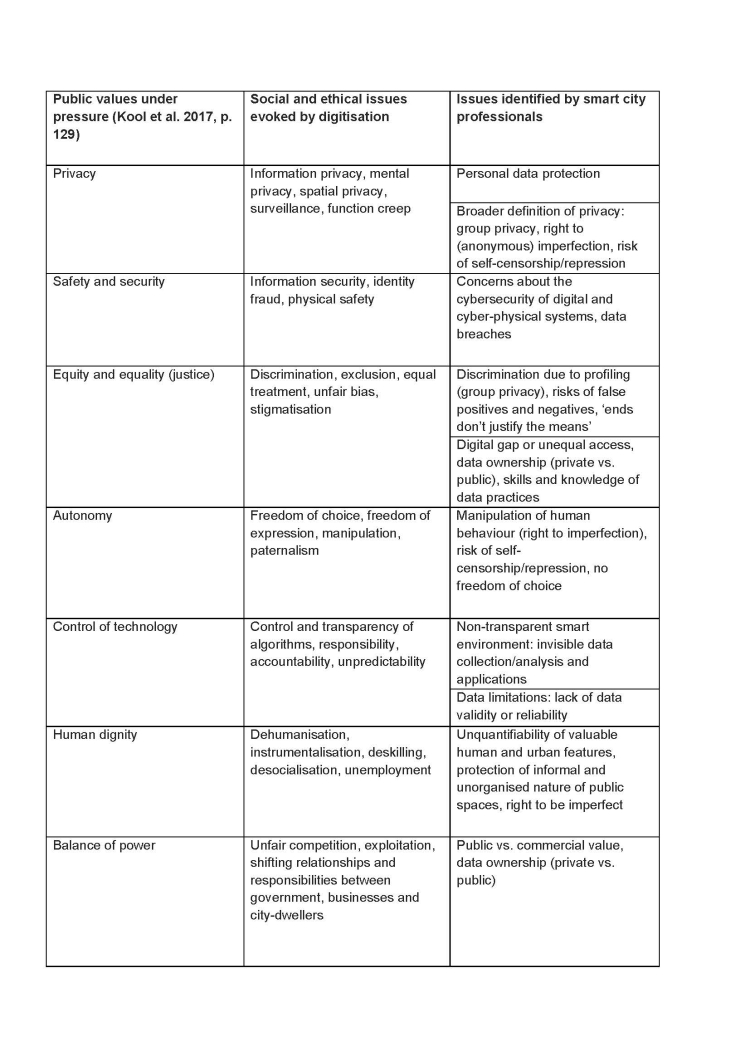

But first: what are the implications of smart city practices for online safety and security, justice, autonomy, control of technology, human dignity, the balance of power, and privacy? The table below lists public values and examples of smart city issues raised during the interviews.

Online safety and security

Even before the Petya cyberattack caused computers at some of Rotterdam’s container terminals to crash for several days, the smart city professional we interviewed in Rotterdam was asking himself how resilient the city is when systems are down. Other professionals also fear cybercrime and what it could mean for their city. They regard data system security as an enormous challenge. Some cities have already reported data breaches. Personal data theft and hacks of municipal systems can have a huge impact on the lives of individuals.

Justice (equity and equality)

Is collecting and analysing personal data justified, if we consider the costs and benefits to society? Civil society organisations question how many cases of benefit fraud have actually been detected using data analysis (e.g. interview with the Waag Society, Amsterdam). It’s a good question. The Municipality of Westland has stopped using analysis-driven systems to track benefit fraud, and although the City of Zwolle says that its system detects enough benefit fraud to cover the cost of data collection and analysis, that is as far as it goes.

Data collection raises questions. Who owns the data? Who can inspect the data, and who can use it? And who is knowledgeable enough to actually do so? The municipal governments of Amsterdam and Eindhoven are locked in negotiations with private companies about access and ownership rights to data collected in public spaces (interviews with the City of Eindhoven and the City of Amsterdam). Municipal projects sometimes depend on businesses that (unlike the municipal government itself) are capable of storing or analysing the data, and that may not always be desirable (interview with the City of Rotterdam). And even when data is accessible to all, it may not always meet the needs of all city-dwellers (interview with Creating010). In addition, not everyone has the computer skills required to read and analyse the data (interview with the Waag Society, Amsterdam).

Another project in which justice plays a role focuses on optimising children’s appointments with the youth health care physician. At the moment, every Dutch child is examined by the youth health care physician at the same fixed intervals. Some municipal governments are experimenting with a more personalised approach using risk profiles. That way they can get a better idea of how much time should be set aside for appointments in each risk group, or how often a child should see the physician. This raises the issue of responsibility, however. Imagine, for example, that something turns out to be wrong with a child who has been assigned to a low-risk category. Is the municipal executive responsible, or the person who wrote the algorithm? (interview with the City of Utrecht). And does personalisation mean that certain groups will be structurally disadvantaged? Unfairly excluded or stigmatised? The smart city professionals whom we interviewed referenced examples from abroad in which data analysis had led to discrimination. They believe that data analyses and algorithms containing such biases should be avoided at all costs. Care should be taken right from the start, in the data-collection stage: what is being tracked, and in which categories (e.g. risk groups)?

Autonomy

Autonomy should not be taken for granted in the smart city, especially when local government entrusts more and more of its decision-making and actions to automated data- and algorithm-driven processes. That is what seems to be happening in China: in 2020, the Chinese government intends to introduce a compulsory Social Credit system that will rank every citizen based on their trustworthiness (interview with SETUP, Utrecht). One of the private systems on which China is modelling its own is Alibaba’s Sesame Credit system, which scores customers not only on how regularly they pay their bills but also on their spending habits: buying diapers boosts a customer’s trustworthiness score, while spending hours playing video games does the opposite (Botsman, 2017). What will happen to a Chinese citizen’s Social Credit Score if she criticises the government in a social media post? And what if one of her friends does? The Chinese government has stated that breaking trust will lead to restrictions, for example on holiday destinations (Botsman, 2017). Fortunately, no municipal or national authorities in the Netherlands have plans to introduce similar systems.

There are, however, various pilot projects that use data and new technology to influence behaviour. This can have implications for the autonomy of local residents or visitors. One example is the Stratumseind project in Eindhoven that we discussed last week. What happens when someone is messing about under a lamp post with his friends? When it is up to data systems and/or the parties using them to decide whether or not to influence behaviour based on available data, the subjects of surveillance have little choice but to supply the data without having consented to do so (Frissen, 2015). Digital housekeeping ledgers that automatically pay monthly bills also restrict people’s freedom to act because they cannot decide for themselves what to spend their money on. At the same time, avoiding extra debt and paying bills on time will give them more financial freedom in the long run.

Control of technology

The biggest problem with automated control arises when that control is invisible, say civil society organisations (interview with ICX, The Hague; interview with the Waag Society, Amsterdam). That is what is known as a ‘black box society’ (see also Pasquale, 2015, referenced by various professionals). Do residents know what technologies are being used in their city? And do they know what these technologies could mean for them?

That is in fact not always very clear in real-life situations. There are a few signs in the Stratumseind district that indicate the presence of a camera (interview with DATAstudio, Eindhoven). They are meant to let people know that data – at least, data of a certain type – is being collected, but they say nothing about how that data is being analysed or used. The presence of technology in public spaces is also obscured by the use of veiled language such as ‘explosion sensors’ instead of microphones (interview with SETUP, Utrecht). Civil society organisations are highly critical of this lack of transparency in projects.

To control technology, it’s also important to acknowledge the limitations of a data-driven approach. Sometimes the patterns that the data reveals are pure coincidence, or problems occur so irregularly that they’re impossible to detect with Big Data analyses (interview with the City of The Hague). These organisations believe that professionals working for the municipal government must control how problems and interventions are defined and not leave such definitions to (self-learning) algorithms, for example.

Focusing blindly on data-driven solutions and optimisation also easily excludes other solutions (interview with Creating010, Rotterdam). One example is using traffic flow optimisation systems to reduce traffic congestion and the associated urban air quality problems in city centres. An alternative would be to restrict city centre access to pedestrians and cyclists, but that is not something that the data will suggest.

Another issue concerns data quality, validity and reliability. Are we measuring what we actually mean to measure? Can we repeat the measurements and get the same results? It turns out to be very difficult to assess the reliability of data that comes not from sensors but from public records (process data) (interviews with the City of The Hague and the City of Rotterdam). One example of suspect validity was a project measuring loneliness among the elderly by tracking the number of social interactions they had. What matters more to the elderly, however, is not the number of social interactions but their intensity (interview with DATAstudio, Eindhoven). Some smart city professionals were aware that data can never be entirely neutral, but must always be interpreted within a specific social context, with technological and methodological limitations always playing a role (interviews with the City of Utrecht and the City of The Hague).

Human dignity

There is growing awareness that the smart city is not about new technology as much as about people and how they cohabit in their social and cultural setting (interview with Creating010, Rotterdam). Can data actually represent people and their state of wellbeing? Various smart city professionals find it risky to use dashboards to reduce complex phenomena to one or two figures. Besides the risk of oversimplification, they also cite the aforementioned concern about bias in data collection, analysis and use (e.g. interviews with the City of Amsterdam, The Hague and Utrecht). The picture that then emerges is not an accurate reflection of social reality, but it may nevertheless form the basis for policy decisions, with consequences for the city’s inhabitants.

The implications of data-driven city management will be felt most keenly by people who deviate from data-defined standards. The result could be social chilling or social cooling: people conforming to the prevailing standards, toning down their behaviour, avoiding risk and repressing their creativity (interview with SETUP, Utrecht).

There is a contradiction here between the worry about the limitations of datafication and the hope that data will provide a more objective basis for decision-making. Even internal management struggles with this dilemma. Data often serves as input for work meetings between the staff and managers of the smart city projects that have been undertaken so far. That has implications for staff evaluations. If one staff member handles ten cases and another sixty, the first will have to explain himself (interview 2 with the City of The Hague). Once again, the question is whether the figures furnish sufficient insight into the complexity of that staff member’s work. And to what extent are staff allowed to be ‘imperfect’ and deviate from data-defined standards?

Balance of power

Cooperation between government, public service providers, businesses, knowledge institutions and, in some cases, local residents has led to shifts in their mutual relationships and responsibilities. The balance of power is not entirely fixed from the very start; it is part of the learning process.

According to the smart city professionals, there are too many ‘supply-side’ projects and not enough demand-driven ones. For example, in real life it is far from easy to encourage IT firms and local prosperity while also tackling community issues.

Data ownership is another critical question. Businesses are eager to obtain data (interview with the City of Eindhoven) and – unlike the municipal government – they already have the capacity to store and analyse it (interview with the City of Rotterdam). Municipal governments are looking to exercise more control and find alternative ways to regulate ownership.

Privacy

Privacy was mentioned in every single interview, but the professionals also stressed that it should not become the overriding issue in the smart city debate, mainly because such projects analyse group and area data and only rarely make use of personal data. Individual privacy only becomes a factor when the analysis involves qualitative information, for example the sort of open questions that people answer when registering with a public organisation.

Nevertheless, there are two points that can be made about this. Big Data is about group profiling, but cross-linking several group profiles can generate a considerable amount of information about individual local residents. In that sense, group analyses can have consequences for individuals as well (interview with DATAstudio, Eindhoven).

In addition, some professionals believe that the right to be imperfect has a place within the concept of privacy. How much leeway is there for alternative or creative behaviour when behaviour is being optimised to conform to existing ideals? (interview with SETUP, Utrecht).

Data-driven control requires safeguards

The smart city requires us to look closely not only at privacy and security, then, but also at the broader protection of individual human dignity. Generally speaking, the civil society organisations that we interviewed were quite sceptical about whether municipal governments were aware of the risks of digitisation and working with smart technology. They believe that expectations are running too high and that ‘no one wants to spoil the party’. However, the five largest Dutch cities are developing all sorts of strategies or have already put them into place, from regulatory measures to communication and from organisational changes to technical measures. We will discuss these next week in the final part of our series: ‘How are municipal governments protecting public values in the smart city?’