Few technologies provoke as much discussion as generative artificial intelligence (GAI). Systems such as ChatGPT and Bard have brought us a step closer to computers that can perform countless tasks, but it is unclear how far the capabilities of artificial intelligence (AI) extend. Some experts think that computers are becoming so powerful that they threaten the survival of humanity. Others think this is exaggerated, or point to important short-term risks such as bias and incorrect output.

This scan takes stock of the situation: what is GAI, what is it currently capable of, and what may it be capable of in the future? What opportunities, risks to public values, and policy options are associated with it? The scan – which is intended for policy-makers and politicians – was carried out at the request of the Dutch Ministry of the Interior and Kingdom Relations, based on a short-term study involving a review of the relevant literature, workshops, and interviews.

Authors

- Linda Kool MSc MA, Coordinator
- Bo Hijstek LLM, Researcher
- Quirine van Eeden LLM, Researcher
- Djurre Das MSc, Senior Researcher

Why publish a Rathenau scan of generative AI?

The term “generative AI” (GAI) refers to AI systems that can create content automatically, at the request of a user. You can ask such a system to produce a summary, for example, or to create a picture in the style of Van Gogh. Since the launch of ChatGPT in November 2022, millions of users worldwide have been experimenting with this technology. It is already having an impact on society, and expectations of what it will bring are high. This scan provides an overview of the possibilities and risks associated with GAI, and of potential policy actions.

Is generative AI something new?

Generative AI builds on existing AI technologies and is a subset of AI systems that learn from data. At the same time, generative AI systems have a number of distinctive features:

• first, they are significantly better at language than other AI systems;

• second, they can work effectively with different “modalities”, such as image, sound, video and speech, and even such things as protein structures and chemical compounds;

• third, generative AI systems receive general training, which provides the basis for all kinds of specific applications.

As a result, GAI systems can perform many different tasks. This sets them apart from many other AI systems, which fall into the category of “narrow AI” and are trained for just one specific task.

What can you do with generative AI?

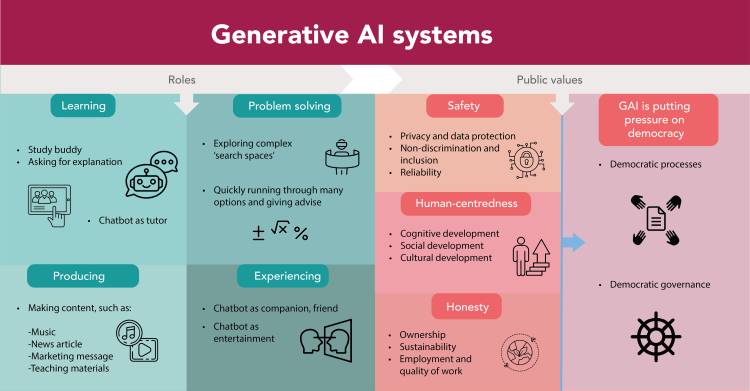

In the present scan, we distinguish four roles that GAI systems can fulfil. A GAI system can be deployed as:

1. a learning tool: for example to look up information or to act as a source of information when doing one’s homework;

2. a production tool: the system creates something at the behest of a user. Many people are already experimenting with this in the workplace;

3. a solver of complex problems: for example in science, where GAI systems help predict how proteins fold, which can support the development of new types of medication;

4. a means of creating an experience: some users find it enjoyable or fascinating to interact with GAI systems, which can take on the role of a companion. For example, someone has already created a chatbot that imitates a deceased loved one.

Despite these possibilities, the technology has its limitations. Generative AI systems are based on statistics: they calculate the most likely answer, which is not necessarily the correct one. This may lead to incorrect answers or discriminatory content. The underlying algorithms are also so complex that people, including those who developed them, can understand how they function only to a limited extent. As a result, the technology is not yet reliable enough to be deployed in critical processes such as medical diagnostics.

What is at stake with the rise of GAI?

Generative AI involves numerous risks that can put public values under pressure. In this scan, we have grouped those risks under three themes. First, there are concerns about the safety of GAI systems: they can violate users' privacy, reproduce bias, and provide false information. Moreover, they are so complex that neither developers nor external parties fully understand how they work, which makes it difficult to prevent risks, now or in the future.

Second, there is the question of how human-centred the systems are: what will they mean for our cognitive, social, and cultural development? Will chatbots encourage creativity? Will we unlearn social skills if we frequently interact with a GAI system? Will we genuinely process grief through chatbots that imitate our deceased loved ones? In short: what does it mean to be human in a world of robots?

Third, there are concerns about whether benefits and burdens are distributed equally and justly: who benefits from GAI systems? Who bears the costs, for example in terms of whose jobs will be affected, and how? How do we protect the work of creative professionals? Which jobs are going to change, and how do we ensure decent work? And how do we deal with the environmental impact?

Finally, we identify an overarching theme: the impact of GAI on our democracy. GAI can hamper democratic processes, such as public debate and political decision-making. Moreover, because of the growing power of a few tech companies, it can affect society's ability to exert democratic control over digital technology in many domains.

In this scan, the Rathenau Instituut concludes that generative AI amplifies existing risks within the digital society and also introduces new ones. In recent years, policy-makers at national, European, and international level have been working to steer AI in the right direction, with the EU’s forthcoming AI Act as an important policy instrument. It is unclear, however, how the open norms in that legislation regarding respect for human rights will be put into practice. When, for example, has the risk of discrimination been reduced to an acceptable level? And acceptable to whom? It is also open to question whether other legal frameworks and policies adequately address the risks associated with generative AI.

The key question is therefore whether current policy efforts are actually sufficient. There is a real possibility that current and proposed policies will be unable to cope with the impact of generative AI systems, for example with regard to non-discrimination, security, disinformation, competition, and the exploitation of workers. It is therefore imperative that the Dutch government set out a strategy for improving society's grip on this technology. This should start with evaluating Dutch and EU policies and determining where they need to be strengthened. It is also important to provide maximum support for regulatory oversight, to make arrangements with developers, and to warn society about the risks of GAI. Globally, these risks are being taken seriously; every individual and every institution in the Netherlands must do the same.

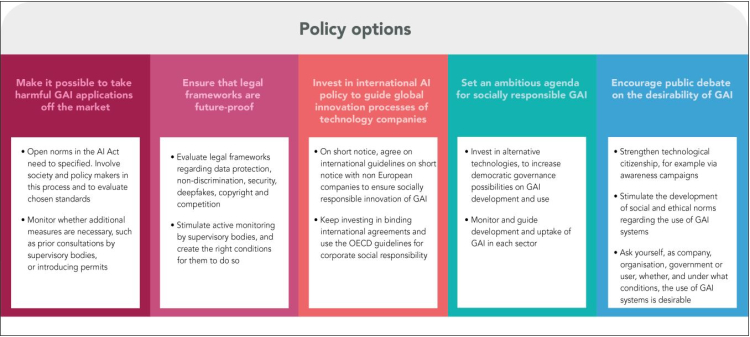

The Rathenau Instituut formulates five courses of policy action for the government:

- Make it possible to take harmful GAI applications off the market;

- Ensure that legal frameworks are future-proof;

- Invest in international AI policy to guide global innovation processes of technology companies;

- Set an ambitious agenda for socially responsible GAI;

- Encourage public debate on the desirability of GAI.
