Policy for AI in health care: a balancing of values

In the blog series 'Healthy Bytes' we investigate how artificial intelligence (AI) is used responsibly for our health. In this second part, Ron Roozendaal, director of information policy at the Ministry of Health, Welfare and Sport (VWS), describes the ministry's AI policy. VWS strives to seize opportunities to improve healthcare using AI. To achieve this, values such as autonomy, solidarity, privacy and non-discrimination must be safeguarded. The ministry encourages an open discussion about the consequences of artificial intelligence in healthcare.

In short

- How is AI used responsibly for our health? That's what this blog series is about.

- The Ministry of VWS strives to seize opportunities to improve healthcare using AI, while safeguarding values such as autonomy, solidarity, privacy and non-discrimination.

- The ministry encourages an open discussion about the consequences of artificial intelligence in healthcare.

Looking for good examples of how artificial intelligence is applied in healthcare, we asked key players in the field of health and welfare in the Netherlands about their experiences. In the coming weeks we will share these insights on our website. How do we make healthy choices now and in the future? How do we make sure we can make our own choices where possible? And do we understand how others take responsibility for our health where they need to? Even as we use technology, our health should remain central.

New questions

Artificial intelligence is in the spotlight. The Strategic Action Plan AI, among other documents, sets out the government's ambitions. NWO, ZonMw and companies are investing in field research into AI, because applications can only be tested through practical experience. This research must also answer many questions: How does AI contribute to people's health? Who can successfully apply AI in healthcare? What risks are associated with the use of AI, and can these risks be sufficiently reduced? At the Ministry of Health, Welfare and Sport (VWS) we also look at legislation and regulations. What are the barriers to using data, and what safeguards are needed?

Doctors have to keep learning once they start working in healthcare. Algorithms, by contrast, should stop learning after they have been approved, because the way they work should not change after approval. What do we think about that? Do healthcare professionals dare to take responsibility for diagnoses made by algorithms? Do we want to know exactly how algorithms work, and is that even possible, or do we mainly look at the results? In many cases, algorithms are currently trained on data collected abroad. Do they apply to patients in the Netherlands? And how do we ensure that data from Dutch patients can also be used for research, while maintaining safeguards such as privacy and security?

These are new questions to which the answers have yet to be found.

Seize opportunities, secure values

The challenge is to apply artificial intelligence for better healthcare in a way that does justice to the public values we want to safeguard, such as autonomy, solidarity, privacy and non-discrimination. That is what VWS aims for.

There are pioneers, such as Pacmed and UMC Utrecht. Pacmed originated from the National Think Tank 2014 on big data and develops big data solutions for healthcare. UMC Utrecht has developed an AI application in psychiatry: in the PsyData project, Floortje Scheepers, Professor of Innovation in Mental Health Care (GGZ), builds algorithms based on data from electronic patient records to help choose the best medication for patients. Together, we will have to give new applications a proper place in healthcare. The Ministry of Health, Welfare and Sport wants to support this effort.

The Ministry of VWS considers it important that sufficient patient data is available for research, so that new AI applications can improve care. The ministry has encouraged the creation of MedMij. MedMij is a Dutch framework of agreements that enables patients to digitally view and manage their health data, which is stored in different places, in one complete overview (a personal health environment, or PGO), and to share that data securely with care providers. MedMij is now a member of the Data Sharing Coalition, a cross-sector collaboration to create a framework of agreements for the safe and responsible sharing of data. Ethical and legal frameworks for artificial intelligence already partly exist. For example, the General Data Protection Regulation (GDPR) prescribes how privacy must be protected, and the Medical Device Regulation (MDR) sets requirements for software that is used as a medical device.

Healthcare institutions must also safeguard values in their quality systems and ethical review committees to enable their employees and patients to use AI responsibly. This requires awareness of the values that are at stake and the ability to use AI applications, both on the part of the healthcare professional and on the part of the patient.

Balancing values together

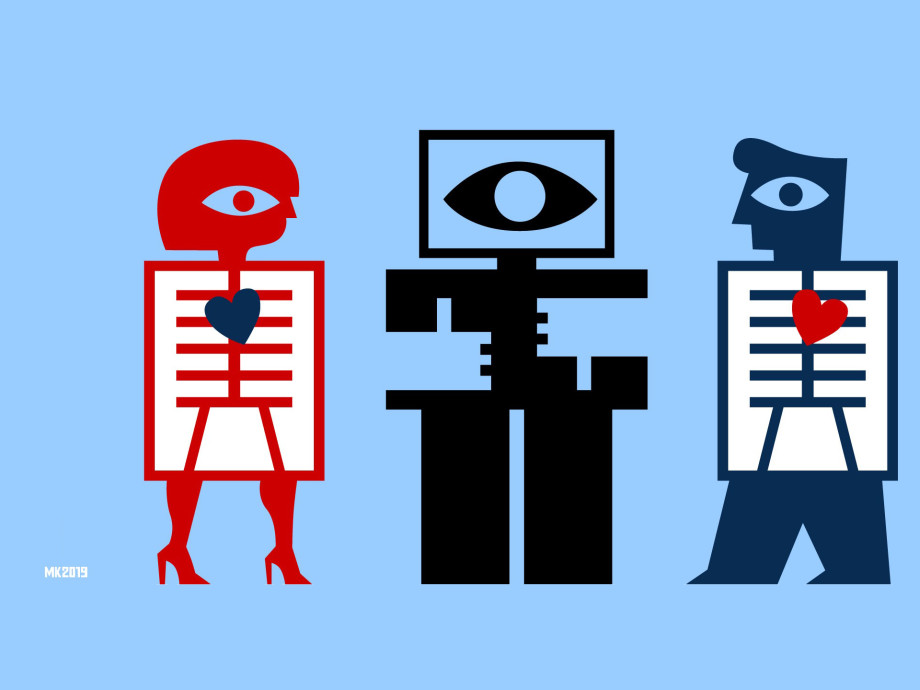

In the report 'Digital doctors', the Centre for Ethics and Health raises ethical questions. Is it ethical not to use new AI applications, even when they have been proven to benefit patients' health? When applying artificial intelligence, a balance must be struck between the quality of care and public values such as autonomy, transparency and solidarity.

The Ministry of VWS has made this balancing of values explicit in the Letter to Parliament ‘Data laten werken voor gezondheid’ (Let Data Work for Health). Patients are in control of their own data, but it is also important that enough data is shared to enable innovation in healthcare. Algorithms should be tested for their validity. Do they provide better care without leading to discrimination or other undesirable outcomes? And if an algorithm demonstrably provides better care but it is not transparent how it works, may it be used?

The various ministries work together to answer these questions. The Digital Government Agenda (NL DIGIbeter), a joint government agenda, addresses transparency and the ethical aspects of artificial intelligence. Its lessons are also useful for healthcare.

An open conversation

The Ministry of VWS recognises that our health can benefit from AI applications. This requires an open discussion about the consequences of artificial intelligence for patients and healthcare providers. Which values will be strengthened, and which will be weakened? What do we find socially acceptable? This is a conversation in which we all have a role to play: citizens, patients, healthcare providers, members of parliament and innovative parties. The Ministry of VWS encourages that discussion, for example by informing the Dutch House of Representatives about developments and by organising meetings such as the Healthcare Information Council (Informatieberaad Zorg).