The emergence of neurotechnology that can perhaps read and influence our mental states has captured people’s imagination. Technology companies are developing devices for consumers to use at home, in the workplace, or in the classroom. Ethicists and legal specialists warn that cognitive freedom and mental integrity will be threatened if outsiders are able to use neurotechnology to access our mental states.

In this scan, the Rathenau Instituut provides an overview of neurotechnology. We describe the intended applications, opportunities, and risks for public values in the short and longer term, and policy instruments for protecting and promoting those values. The scan concludes by outlining the options that policymakers have for taking action. This Rathenau Scan was produced at the request of the Dutch Ministry of the Interior and Kingdom Relations.

What is neurotechnology?

The brain is a complex organ in which various mental states originate, including concentration, attention, cognitive skills, thoughts, memories, emotions, and dreams. For decades now, there has been significant investment in research aimed at better understanding the processes within the brain that generate these mental states. In the present scan, we use ‘neurotechnology’ as an umbrella term for the various technologies that measure and/or influence brain activity.

Reason for a Rathenau Scan on neurotechnology

The Rathenau Instituut sees three main reasons for looking closely – especially now – at how far neurotechnology has advanced and at what potential impact it can have on society. First, large technology companies are increasingly entering the field of neurotechnology, expanding the range of consumer devices that work with this technology.

Second, technological developments are underway that will make the non-medical use of neurotechnology more readily accessible. The current pace of progress in artificial intelligence (AI) systems means that neurodata will become easier to interpret for non-medically trained individuals. Moreover, the development of ‘dry’ EEG sensors makes it possible to measure brain activity without having to apply a conductive gel to the scalp, thus considerably improving consumer access to neurotechnology.

The third reason for this scan is the current international ethical and legal discussion of the need to expand the existing frameworks of human rights to include specific 'neurorights'. The central issue under discussion is whether new rules are needed to protect people against having their mental states read out, and against their behaviour being directly influenced and possibly even manipulated.

How does neurotechnology work?

To understand how neurotechnology works, it is useful to distinguish between a number of different processes that are involved.

Measurement: The brain contains a large number of neurons (nerve cells) that fire electrical signals when they are active. An active area of the brain also consumes additional oxygen. This kind of physiological activity can be observed and converted into digital data using a measurement system. Different technologies, such as EEG, ECoG or fMRI, measure different types of physiological activity. Neurodata is therefore a digital representation of physiological activity – oxygen consumption and electrical activity – within the brain.

Analysis: Neurodata is interpreted by a professional, a consumer, and/or a computer program so as to draw conclusions about the mental state of an individual. In consumer applications, an AI system is often used to identify and display patterns in neurodata. Analysis of neurodata is successful when it generates useful insights into mental states. Researchers can also merge neurodata from different individuals and perhaps combine it with other kinds of data in order to discover how emotions, preferences, or thoughts work at the level of a group.

Application: Insights into the mental state of an individual or group can be utilised in various ways. The information that neurotechnology provides about someone’s mental state can be fed back directly to that person (neurofeedback). The technology can also be used for neuromodulation, meaning that it actually influences the activity of the brain. Finally, neurotechnology can be used to control a computer system.
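As an illustration only, the three steps of measurement, analysis, and application can be sketched in a few lines of Python. The sketch is not taken from the scan or from any actual product. It assumes a synthetic single-channel signal in place of a real EEG recording, a hypothetical 'concentration index' (the ratio of beta-band to alpha-band power, a simple heuristic that is not scientifically validated here), and an arbitrary threshold that in practice would need to be calibrated for each person. All function names are invented for the example.

```python
import numpy as np


def synthetic_eeg(fs, seconds, alpha_amp, beta_amp, seed=0):
    """Measurement (simulated): one channel with a 10 Hz 'alpha' and a
    20 Hz 'beta' component plus noise. A stand-in for real sensor data."""
    rng = np.random.default_rng(seed)
    t = np.arange(int(fs * seconds)) / fs
    return (alpha_amp * np.sin(2 * np.pi * 10 * t)
            + beta_amp * np.sin(2 * np.pi * 20 * t)
            + 0.1 * rng.standard_normal(t.size))


def band_power(signal, fs, low, high):
    """Mean spectral power of `signal` between `low` and `high` Hz."""
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    power = np.abs(np.fft.rfft(signal)) ** 2
    mask = (freqs >= low) & (freqs < high)
    return power[mask].mean()


def concentration_index(signal, fs):
    """Analysis: hypothetical index, beta (13-30 Hz) over alpha (8-13 Hz)."""
    return band_power(signal, fs, 13, 30) / band_power(signal, fs, 8, 13)


def feedback(index, threshold=1.0):
    """Application: turn the index into a neurofeedback label.
    The threshold is an assumption and would differ per person."""
    return "focused" if index > threshold else "relaxed"


if __name__ == "__main__":
    fs = 256  # samples per second (assumed)
    for name, alpha, beta in [("A", 1.0, 0.2), ("B", 0.2, 1.0)]:
        signal = synthetic_eeg(fs, 2.0, alpha_amp=alpha, beta_amp=beta)
        print(name, feedback(concentration_index(signal, fs)))
```

The label produced at the end is an inference from physiological data, not a direct reading of a mental state: a different index or a different threshold would classify the same signal differently.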

One problem with all these technologies is that everyone’s brain generates different, unique brain activity. For more complex mental states – for example memories, dreams, and specific emotions such as homesickness – the neurotechnology needs to be fine-tuned to the specific person's brain.

Applications of neurotechnology

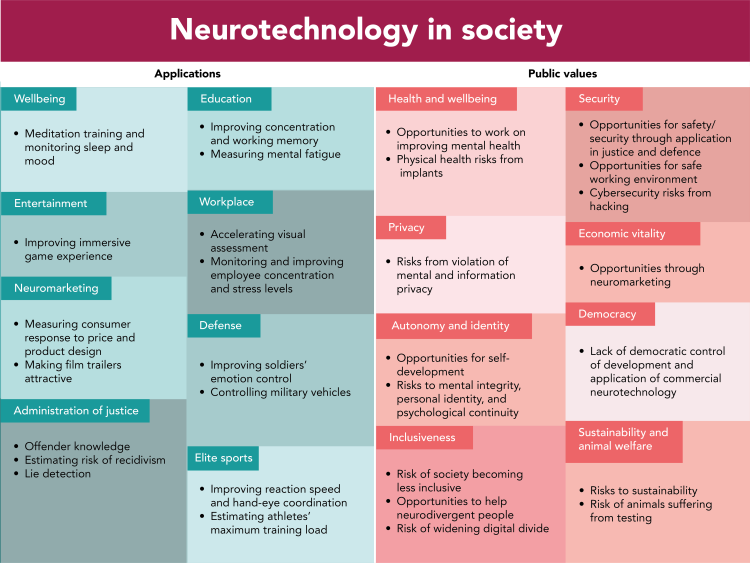

In the present scan, we describe a number of applications of neurotechnology that are currently the subject of research or experimentation in various fields. In medicine, neurotechnology has a history going back several decades and its applications have been researched extensively. The opportunities and risks are therefore better understood there than beyond the medical sector, and the existing rules reflect this. Our investigation focuses on neurotechnology beyond the field of medicine. More recently, applications have been developed for personal use and in the fields of marketing, law, education, the workplace (including the defence sector), and elite sports. These fields are subject to different regulations from those that apply to neurotechnology in the medical sector.

The most rapid growth in the use of neurotechnology in the short term will be in ‘dry’ EEG measurement systems that use AI for analysis and that are incorporated into existing hardware such as helmets, headbands, AR/VR glasses, or earbuds. Users can already purchase these with a view to improving their cognitive abilities, mental health, or game experience.

Insights into a person's mental state, such as their attention and preferences, are being utilised to improve marketing. Experimental research is investigating whether neurodata can clarify what someone remembers. This application may be useful in the administration of justice. Experiments have shown that, in specific circumstances, neurofeedback can help improve educational performance, concentration in the workplace, and the performance of top athletes and military personnel. How these results hold up in the world outside the setting of a laboratory still needs to be studied more closely.

Neuromodulation is not yet used outside the medical field. Controlling devices by using EEG is already applied on a small scale in gaming, and is being investigated for various defence applications, such as driving a military vehicle.

Opportunities and risks for public values

We note that various elements of neurotechnology in the non-medical field entail both opportunities and risks where public values are concerned. For individuals, a better understanding of mental states – such as the ability to concentrate – can have positive effects on mental health. Neurotechnology can also contribute to personal development, for example by improving pupils' ability to learn in an educational setting. It may also be possible to use insights gained from neurodata to improve marketing for commercial or public purposes, resulting in economic vitality and prosperity. We also note, however, that at present claims for the potential of neurotechnology cannot always be substantiated scientifically.

At the level of society as a whole, neurotechnology could improve safety by monitoring the alertness and concentration of people in dangerous occupations, and by enhancing military capabilities.

The downside of these potential benefits for the individual is the risk they pose to the privacy of information and to mental privacy. A measurement system can be hacked and used in an attempt to obtain personal information. One experiment showed that it was possible to arrive at a good estimate of someone's PIN by showing them pictures of numbers and analysing their neurodata.

By mental privacy, we mean being able to keep private those mental states, such as particular thoughts, that you do not wish to reveal voluntarily. Interesting experiments have been performed in which images or sounds perceived by subjects were reconstructed entirely from their neurodata. Nevertheless, the possibilities for ‘mind reading’ are currently limited and it is also unlikely that our personal thoughts will become easily accessible in the future. According to the experts, human experience and thoughts about it cannot be reduced to physiological activity within the brain and the neurodata derived from that activity. Being aware of these subtle distinctions is important in assessing the risks that neurotechnology poses in terms of mental privacy.

Even so, neurotechnology can indeed pose risks if neurodata is used in a way contrary to the interests of a user or the public interest. What is derived from neurodata need not be entirely consistent with actual mental states in order to have harmful consequences. Working with faulty interpretations may lead to a miscarriage of justice or an incorrect assessment of someone’s alertness in the workplace.

There are also risks associated with the use of AI systems in neurotechnology. A bias in the training data can disadvantage certain groups of people because the technology fails to work for them, or fails to do so effectively. Deploying AI systems can also be at the expense of autonomy. When an AI system responds directly to patterns in neurodata, it is unclear who is responsible for making a decision.

Widespread implementation of neurotechnology may create opportunities for increasing inclusiveness if neurodivergent individuals are helped to operate more successfully within society. There is also a risk, however, that differences between people will be less accepted because they can be treated. It may be possible in future to correct what is at present regarded as anxiety. That can help individuals, but it can also lead to a greater tendency to regard symptoms as something to be ashamed of.

Short-term and long-term risks

The main opportunities and risks of neurotechnology seem to fall into roughly two groups. Consumers can already benefit from wearable, non-invasive neuroimaging technology such as EEG. The risks that this technology entails are similar to those that have already been the object of discussion where other data privacy issues are concerned, but this technology goes a step further by literally and figuratively getting ‘up close and personal’.

Other neurotechnologies – such as invasive brain implants, devices that directly influence (‘modulate’) the brain, and non-wearable imaging neurotechnology – offer more options for imaging or influencing mental states, but these are still very much at the development stage, are often less user-friendly, and are required to comply with strict medical guidelines. The wide-ranging risks to society associated with these neurotechnologies are therefore of a longer-term nature and involve greater uncertainty, but they are in fact much more far-reaching.

Policy analysis

Given the potential opportunities and risks, it is important to put policies and regulations in place so as to prepare for the societal effects that neurotechnology may have.

Unclear privacy legislation

For the short term, there are risks associated with collecting, storing, processing, and analysing neurodata. The EU’s GDPR and AI Regulation, in particular, apply here. Outside certain specific contexts such as health or politics, this legislation probably provides insufficient protection for neurodata and the information about personal preferences that can be derived from it. It is unclear when neurodata falls into the category of health data or ‘special personal data’ in the GDPR and thus qualifies for additional privacy protection. Non-pathological, emotional information and affective mental states may well fall outside the scope of that protection.

Neurodata also involves inherent challenges with regard to the basic requirements of the GDPR, namely transparency and informed consent, proportionality, data minimisation, purpose limitation, and accuracy. When the neurodata is subsequently analysed, the AI Regulation may play a role, although this applies only to certain highly specific applications, is subject to conditions that are vaguely formulated, and involves numerous exceptions and a system of self-assessment. Much will depend on how the standards and regulations are implemented.

Neurorights

There are certain issues where the legislation appears to fall short, with insufficient mitigation of the risks, particularly when neurotechnology is utilised on a large scale. This involves risks to the privacy of information (protection of personal data) and mental privacy (keeping all the aforementioned mental states private), autonomy and identity, physical safety and mental health, democracy, and inclusiveness.

Current discussion of neurorights focuses in particular on whether the mental states of individuals are sufficiently protected, in the light of emerging neurotechnology, by the current national, EU, and international human rights frameworks. There is as yet no consensus on this. First, it is debatable whether human rights frameworks can adapt sufficiently to the new situations that developments in neurotechnology may entail, or whether changes will be necessary. Second, opinions differ as to whether we already need to respond to developments in neurotechnology that may not actually materialise for a long time, if ever, such as the covert reading of people's thoughts. Third, it is open to question which should carry more weight: people's freedom to improve their mental states with the aid of neurotechnology, or their protection from outside interference in those mental states.

The Rathenau Instituut concludes that the short-term opportunities and risks are associated with the large-scale market introduction of portable neurotechnology that is easy for non-medically trained individuals to use. The large-scale collection and processing of neurodata involves risks against which it is unclear whether current Dutch, European and international regulations offer sufficient protection. If neurotechnologies develop significantly in the long term, it may become possible to read, influence and manipulate mental states with greater accuracy. In that case, public values associated with fundamental aspects of being human, such as autonomy and identity, will come under pressure.

We conclude that various policy instruments provide some, but not complete, protection against the risks that neurotechnology poses to public values. There are several courses of action for protecting and realising public values in relation to the short-term impact of wearable, non-invasive technology, and the uncertain long-term impact of other neurotechnologies. These are summarised in the figure below.