AI in care: implications for education

In the blog series 'Healthy Bytes' we investigate how artificial intelligence (AI) can be used responsibly for our health. In this fifth part, Heleen Miedema, Katja Haijkens and Govert Verhoog discuss what the increasing use of technology in healthcare means for the training of healthcare professionals. As programme directors of Technical Medicine, Biomedical Technology and Health Sciences at the University of Twente, they believe it is important for students to develop a critical, reflective attitude towards AI and other technology in healthcare.

In short

- How is AI used responsibly for our health? That is what this blog series is about.

- The University of Twente feels responsible for helping students understand the social significance of technological developments, so that they can apply them appropriately.

- This requires students to develop a critical, reflective attitude towards technology.

Looking for good examples of how artificial intelligence is applied in healthcare, we asked key players in the field of health and welfare in the Netherlands about their experiences. In the coming weeks we will share these insights on our website. How do we make healthy choices now and in the future? How do we make sure we can make our own choices whenever possible? And do we understand how others take responsibility for our health where they need to? Even as technology is used, our health should remain central.

Back to basics for responsible AI

Developments in ICT have made it possible to collect large amounts of data and build datasets from it. AI is the next step in this development. Automatic decision systems can use AI to discover patterns in large datasets and thus support decisions, for example in healthcare. To interpret the outcomes well, you need to understand the context of the data and how it was generated.

To use AI responsibly in healthcare, it is necessary to go back to basics: to the underlying data and the way it is processed. Can you extract the information you need from this data? How is the data stored, according to which criteria, and for what purpose? Another basic question is which decisions AI should support. You may have the right database, but what kind of decisions should computers make with it? How important are automatic decision systems, and what kind of support is really needed?

It is important to realise that no single group in society is responsible for the development of AI. People from different disciplines need to be involved in technological developments. It is not only about what is technologically possible, but also about what society wants to achieve with that technology and how its application can improve healthcare.

It is the university's responsibility to ensure that students know where a technology comes from, so that they can also understand the social significance of the next technological development. The university is also responsible for ensuring that students can relate to the patient's perspective, so that they can connect healthcare technology to the care itself as well as possible.

The perspective of the patient or client

The intention is for students of Technical Medicine, Biomedical Technology and Health Sciences at the University of Twente to develop a broad perspective on technology. They learn to pay attention not only to the technology itself, to costs or to regulations, but also to the patient or client.

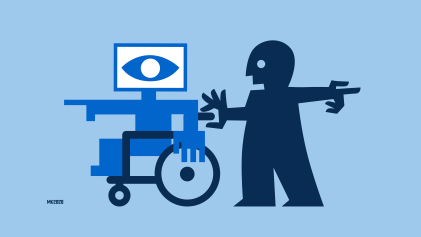

Recently, students from various countries were challenged to design a wheelchair. One group developed a brilliant wheelchair that could do everything technologically possible, but it was so heavy that no grandmother would ever use it. Other students designed a wheelchair from the perspective of a blind boy who had difficulty finding his way through the corridors of a school with a constantly changing timetable. This wheelchair had only one function: to help the boy move from one classroom to the next, based on the most up-to-date timetable.

The students who developed the second wheelchair understood what really mattered. They asked themselves which problem could actually be tackled with the help of technology. Students need to be trained to develop products like the second wheelchair.

A critical, reflective attitude

How responsible a technology is depends on its designer and their view of the world. Students need to be aware of this, and they therefore develop a critical, reflective attitude towards AI and other technology in healthcare. They ask themselves questions such as: What does the technology promise? What other (possibly unintended) applications could it have? What are its advantages, disadvantages and risks?

Anyone who relies blindly on technology can be led astray. A 3D ultrasound device, for example, generates an image of reality based on sound waves. It is not certain whether the image actually reflects reality or is only a partial representation of it. The fact that something cannot be seen on an ultrasound scan does not mean that it is not there. Care professionals need to be aware of this.

Moreover, much of the technology now used in healthcare was not originally designed for healthcare. Robotics in the car industry is different from robotics in healthcare. Every car of a given model is identical to every other car of that model, but every human being is unique. The average human exists only in statistics. This is another reason for (future) healthcare professionals to develop a critical attitude towards the technology they use.

A doctor is a medical expert, not a technology expert. Their training did not include mathematics, mechanical engineering, ICT or physics, yet technology has a major impact on what they do in their work. Taking this into account is a challenge both for the training of healthcare professionals and for the development of healthcare technology.

It is a pitfall to automatically assume that people understand technology. We expect a lot from everyone without realising what information, training and education are needed to use AI responsibly in care. Properly trained professionals: that is the aim of the University of Twente with its Health Sciences, Biomedical Technology and Technical Medicine programmes.