Artificial intelligence in healthcare: deciding together is crucial

In the blog series 'Healthy Bytes', we investigated how artificial intelligence (AI) is used responsibly for our health. Over the past four months, the series has looked for good examples from which we can learn how professionals, patients and society can shape the responsible use of AI. Different parties (policymakers, educators of healthcare professionals, AI developers, entrepreneurs, researchers and investors) gave their viewpoints in a total of ten blogs. We asked them what they think socially responsible AI innovation should look like, and what impact AI will have on the organisation of healthcare, on healthcare professionals and on healthcare users. In this concluding blog, we look back on the insights we have gained.

In short

- How is AI used responsibly for our health? That's what this blog series is about.

- In the blog series, ten stakeholders with different perspectives on the development of AI for healthcare shared their viewpoints.

- In this final blog, we conclude that in order to develop responsible AI for care and health, it is especially important to work together and decide together.

Looking for good examples of the application of artificial intelligence in healthcare, we asked key players in the field of health and welfare in the Netherlands about their experiences. Over the past months we have shared these insights via our website. How do we make healthy choices now and in the future? How do we make sure we can make our own choices when possible? And do we understand how others take responsibility for our health where they need to? Even with the use of technology, our health should be central.

Socially responsible innovation with AI in healthcare

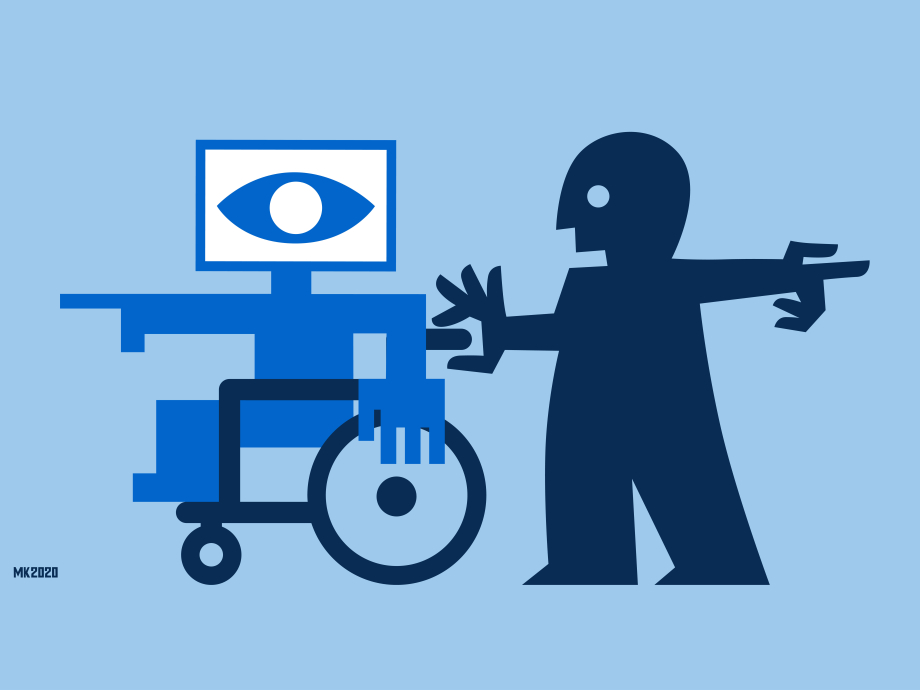

In the opening blog, we stated that responsible use of AI means that our health is central and that patients can make their own choices. AI can support us in these choices. But the more we rely on technology, the more topical the question 'who decides?' becomes. Safeguards are needed in automated decision systems to protect values such as autonomy, privacy and an inclusive society.

In a series of blogs, ten different stakeholders have answered the question: what does using socially responsible AI in healthcare really mean? If we summarise what the different stakeholders in the blog series have said, it is this: AI should have a positive impact on the quality of care and at the same time safeguard public values. Many different values are mentioned in the blogs, such as having a say, autonomy, accountability, privacy, an inclusive society, transparency, solidarity and non-discrimination. Ron Roozendaal (VWS) calls responsible innovation with AI in healthcare a 'trade-off between the quality of care and public values'. This balance requires an open discussion with all stakeholders.

How can socially responsible AI be used in practice?

Socially responsible AI also has to do with the way AI is used in practice. Does the situation lend itself to the use of AI? And does AI support the work of healthcare professionals, or does it only make the work more complex? Henk Herman Nap and Dirk Lukkien (Vilans) and Carine van Oosteren (SER) therefore argue that we should learn from good examples from practice. One such example is a tool that analyses the text in the Electronic Client Dossier to support medical professionals in determining the client's care needs. Heleen Miedema, Katja Haijkens and Govert Verhoog (University of Twente) teach their students to adopt a critical, reflective attitude towards technology, for example with regard to the use of a 3D ultrasound machine, which only gives a limited picture of reality. If something cannot be seen on an ultrasound image, it does not necessarily mean that it is not there. According to social scientist Antoinette de Bont (Erasmus University), socially responsible use of AI is about its legitimate use. This means not only that the use is in accordance with the rules, but also that it is socially and morally legitimate. Citizens need to agree to the purpose for which AI is used, and users need to know what they are using and why.

According to the various parties in this blog series, there is no central role for technology in achieving socially responsible innovation. According to Nico van Meeteren and Hanneke Heeres (Health-Holland), health should not be central either. What matters is that people can participate in society, despite a chronic illness, and that technology supports them in doing so. A portable artificial kidney is a good example. This will give kidney patients much more freedom in their daily lives in the future, because they will no longer be tied to kidney dialysis in the hospital.

Data raises several questions in relation to socially responsible AI

Of course, socially responsible innovation with AI also has to do with data. Is patients' data safe with the start-ups and companies that drive AI innovation in healthcare? What happens to this data when a company goes bankrupt? That is what Carine van Oosteren (SER) wonders. Is the data actually suitable for extracting the information you need? And have values such as privacy, medical confidentiality and equality been taken into account when collecting data and developing AI?

Dutch legislation sets strict, specific requirements for the re-use of data collected within a treatment relationship between doctor and client. Does training an algorithm with this confidential data serve public health, and can the data therefore be used for this purpose? According to lawyer Joost Gerritsen, this question should be discussed in public debate. And can healthcare professionals understand the results of the data analysis, such as support in a decision or diagnosis, and apply them in their practice? Professor of machine learning Max Welling (University of Amsterdam, Qualcomm) emphasises that logistics must first be developed in a European context before AI can make real progress in healthcare. These logistics should ensure that data is collected and used in a safe and systematic way. This requires overarching European legislation for the healthy use of data.

Work together, decide together

The blog series 'Healthy Bytes' shows that socially responsible innovation with AI in healthcare is not easy. Different parties see all kinds of challenges. Peter Haasjes (NextGen Ventures) and Jörgen Sandig (Scyfer) show that it is difficult to develop a good business case. To be implemented successfully, AI has to benefit healthcare, for example by improving the quality of care or making it more efficient. But it also has to pay off financially. For healthcare organisations, the challenge is to fit AI systems into existing work processes in such a way that they help to make better decisions or reduce the workload. Healthcare professionals need to learn to work with a new type of technology, and trainers need to prepare them for this.

In order to overcome these obstacles and implement AI in a socially responsible way in care practices, it is important to work together. The parties who have spoken in this blog series also emphasise the importance of cooperation. One example is collaboration between developers and end-users, so that the systems being developed address a 'real' problem in healthcare practice or help prevent someone from becoming ill. Another is collaboration between venture capital, start-ups and future users to develop a good business case: an application that improves care in one way or another and that delivers financial benefits. Collaboration between technical and social scientists is needed to ensure that applicable rules are correctly interpreted and complied with, or to determine whether an algorithm is desirable and suitable for application in healthcare. And collaboration between policymakers and the public is needed to ensure that the development of AI applications that address the important issues in healthcare is stimulated, and that public values are properly safeguarded in these applications.

How can AI be used responsibly in the future?

AI in healthcare: who decides? It is still far from clear what AI systems will do in healthcare and what role they will play in healthcare practice. We are still at the beginning of developments. Together we have to decide what form AI will take in healthcare. Health, welfare and the ability to participate in society must always be at the heart of this.